In this article, we’re breaking down what’s really powering ChatGPT, Claude, and all the AI tools you keep hearing about.

It’s something called an LLM, or Large Language Model.

By the time we're done, you’ll understand what that actually means — in plain English — and how you can start using one yourself!

What We’ll Cover:

Neural Networks

Deep Learning

Deep Learning Architectures

Transformers

LLMs

Types of LLMs

How to Use LLMs in Python

Fine-Tuning and RAGs

If you prefer watching a video to reading a blog post, you can check out my YouTube video, How LLMs Work, here.

1. Neural Networks

At the core of AI is something called a neural network.

Imagine a system where you feed in data on the left and get a prediction on the right. In between are layers of nodes, which act like little decision-makers.

Here’s what happens at each node:

Inputs are multiplied by weights and summed up

The result is passed through an activation function (a simple nonlinear function, such as sigmoid or ReLU, that determines how strongly the node responds)

That output becomes an input for the next layer

This structure lets the network learn patterns — like the sentiment of a sentence (whether it sounds positive or negative).
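The three steps above can be sketched in a few lines of Python. This is only a minimal illustration, not how any particular library does it: the input values and weights are made up, sigmoid is just one common choice of activation function, and the optional `bias` term is an extra that the description above leaves out.

```python
import math

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def node_output(inputs, weights, bias=0.0):
    """Compute one node: weighted sum of the inputs, then an activation."""
    weighted_sum = sum(i * w for i, w in zip(inputs, weights)) + bias
    return sigmoid(weighted_sum)

# Hypothetical values chosen only for illustration
inputs = [0.5, -1.0, 2.0]
weights = [0.8, 0.2, -0.5]
print(node_output(inputs, weights))
```

The number that comes out would then be passed along as one of the inputs to the nodes in the next layer.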

2. Deep Learning

When you add more hidden layers to a neural network, you get deep learning.

With just one or two hidden layers, it’s a basic (or "shallow") neural network. Add more, typically three or more, and it’s generally considered deep, although there is no strict cutoff. These additional layers allow the model to learn more complex representations, like grammar, tone, or relationships in text data.
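Here is a rough sketch of what "adding more layers" looks like in code, assuming a tiny made-up network with two hidden layers. All the weights are hypothetical, ReLU is used as the activation function for simplicity (including on the output node), and the function names are just for illustration.

```python
def relu(x):
    """Keep positive values, replace negative values with zero."""
    return max(0.0, x)

def layer_forward(inputs, weight_rows, biases):
    """One layer: each row of weights defines one node in the layer."""
    return [relu(sum(i * w for i, w in zip(inputs, row)) + b)
            for row, b in zip(weight_rows, biases)]

def network_forward(inputs, layers):
    """Feed the output of each layer into the next one."""
    activations = inputs
    for weight_rows, biases in layers:
        activations = layer_forward(activations, weight_rows, biases)
    return activations

# Hypothetical network: 2 inputs -> 3 hidden nodes -> 2 hidden nodes -> 1 output
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]], [0.0, 0.0, 0.0]),
    ([[1.0, 1.0, 0.0], [0.0, 1.0, 1.0]], [0.0, 0.0]),
    ([[1.0, -1.0]], [0.5]),
]
print(network_forward([2.0, 1.0], layers))
```

Making the network deeper just means adding more entries to `layers`; the loop that passes values forward stays the same.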

3. Deep Learning Architectures

Deep learning becomes more powerful when you tailor it to specific types of data. These specialized designs are known as architectures. Some popular ones include:

CNNs → Excellent for images

RNNs and LSTMs → Designed for sequential data like text or time series

Transformers → Great for text, code, images, and more

While CNNs are still used for image data, RNNs and LSTMs have largely been replaced by transformers, which handle long-range dependencies much more effectively.

4. Transformers

Introduced in 2017, transformers completely changed the AI landscape.

They’re built on three key components:

Embeddings: Convert words into locations in space (lists of numbers). Words with similar meanings end up near each other.

Attention: Incorporates surrounding words to capture context. For example, in the phrase “cold lemonade”, the model learns that “cold” modifies “lemonade.”

Feedforward Neural Networks: Learn deeper relationships (like grammar or idioms) from the text.
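To get a feel for what "near each other" means for embeddings, here is a small sketch that compares word vectors with cosine similarity, a common way to measure closeness. The three-dimensional vectors below are invented for illustration; real embeddings are learned during training and usually have hundreds of dimensions or more.

```python
import math

def cosine_similarity(a, b):
    """Return a score near 1.0 for vectors pointing in similar directions."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings, made up for this example
embeddings = {
    "cold": [0.9, 0.1, 0.0],
    "chilly": [0.8, 0.2, 0.1],
    "lemonade": [0.1, 0.9, 0.3],
}

print("cold vs chilly:  ", cosine_similarity(embeddings["cold"], embeddings["chilly"]))
print("cold vs lemonade:", cosine_similarity(embeddings["cold"], embeddings["lemonade"]))
```

With these made-up numbers, "cold" scores higher against "chilly" than against "lemonade", which is the kind of pattern a trained embedding is meant to capture.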

Transformers are the foundation behind translation, summarization, code generation — and most importantly, chatbots like ChatGPT.
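To make the attention component more concrete, here is a simplified sketch of scaled dot-product self-attention in plain Python. It makes two big assumptions: the two-dimensional word vectors are invented, and each vector is used directly as its own query, key, and value, whereas real transformers first apply learned projections to produce those separately.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    biggest = max(scores)
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Blend each vector with the others, weighted by how similar they are."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this word against every word, scaled by sqrt(dimension)
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        # The new representation is a weighted average of all the vectors
        mixed = [sum(w * v[d] for w, v in zip(weights, vectors))
                 for d in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical vectors for the phrase "cold lemonade"
tokens = ["cold", "lemonade"]
vectors = [[1.0, 0.0], [0.6, 0.8]]
outputs, weights = self_attention(vectors)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how much that word "pays attention" to every word in the phrase, and each output vector is a blend that now carries some context from its neighbors.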

5. Large Language Models (LLMs)

Now, let’s define an LLM. It’s a transformer-based model trained on a massive amount of text data.

Here’s how the training process works:

The model is shown large amounts of text (books, websites, articles, etc.).

It’s asked: “Given this text, what word comes next?” (In practice, models predict tokens, which are words or pieces of words.)

It makes a guess. The further off the guess is, the more it adjusts its weights.

This cycle repeats — millions or billions of times.
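The cycle above can be imitated at toy scale. The sketch below is not a transformer: it is a tiny stand-in model that only looks at the current word, with one weight per (current word, next word) pair, trained on a made-up nine-word text. What it does share with real LLM training is the loop itself: guess the next word, measure how wrong the guess was, and nudge the weights. The learning rate and number of passes are arbitrary choices.

```python
import math

text = "the cat sat on the mat the cat ate".split()
vocab = sorted(set(text))
index = {word: i for i, word in enumerate(vocab)}

# One weight per (current word, next word) pair, all starting at zero
weights = [[0.0] * len(vocab) for _ in vocab]

def predict(word):
    """Return a probability for each vocabulary word being the next word."""
    logits = weights[index[word]]
    biggest = max(logits)
    exps = [math.exp(value - biggest) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]

learning_rate = 0.1
for epoch in range(200):  # repeat the cycle many times
    for current, actual_next in zip(text, text[1:]):
        probs = predict(current)  # the model's guess
        row = weights[index[current]]
        for j in range(len(vocab)):
            target = 1.0 if j == index[actual_next] else 0.0
            # Gradient step for cross-entropy loss on a softmax output:
            # the bigger the error, the bigger the weight update
            row[j] -= learning_rate * (probs[j] - target)

probs = predict("the")
best = vocab[probs.index(max(probs))]
print("Most likely word after 'the':", best)
```

A real LLM replaces the simple weight table with a transformer containing billions of weights and uses far more text, but the guess-and-adjust loop is the same idea.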

Once trained, all that complexity is hidden. You type something in, the model performs the necessary calculations, and quickly generates a response.

Large = trained on huge datasets

Language = focuses on text

Model = uses deep learning to make predictions

6. Types of LLMs

Not all LLMs are structured the same. There are three categories of LLMs:

Encoder-only → Best for understanding text. You give it a sentence, and it returns a numeric representation that captures the meaning of the text. Popular LLM: BERT

Decoder-only → Best for generating text. You give it a prompt, and it continues word by word. Popular LLM: GPT (the model family behind ChatGPT)

Encoder-decoder → Combines both understanding and generation. Common in translation and summarization tasks. Popular LLM: T5

All of these use the same general transformer architecture behind the scenes, but they differ in how they apply it.

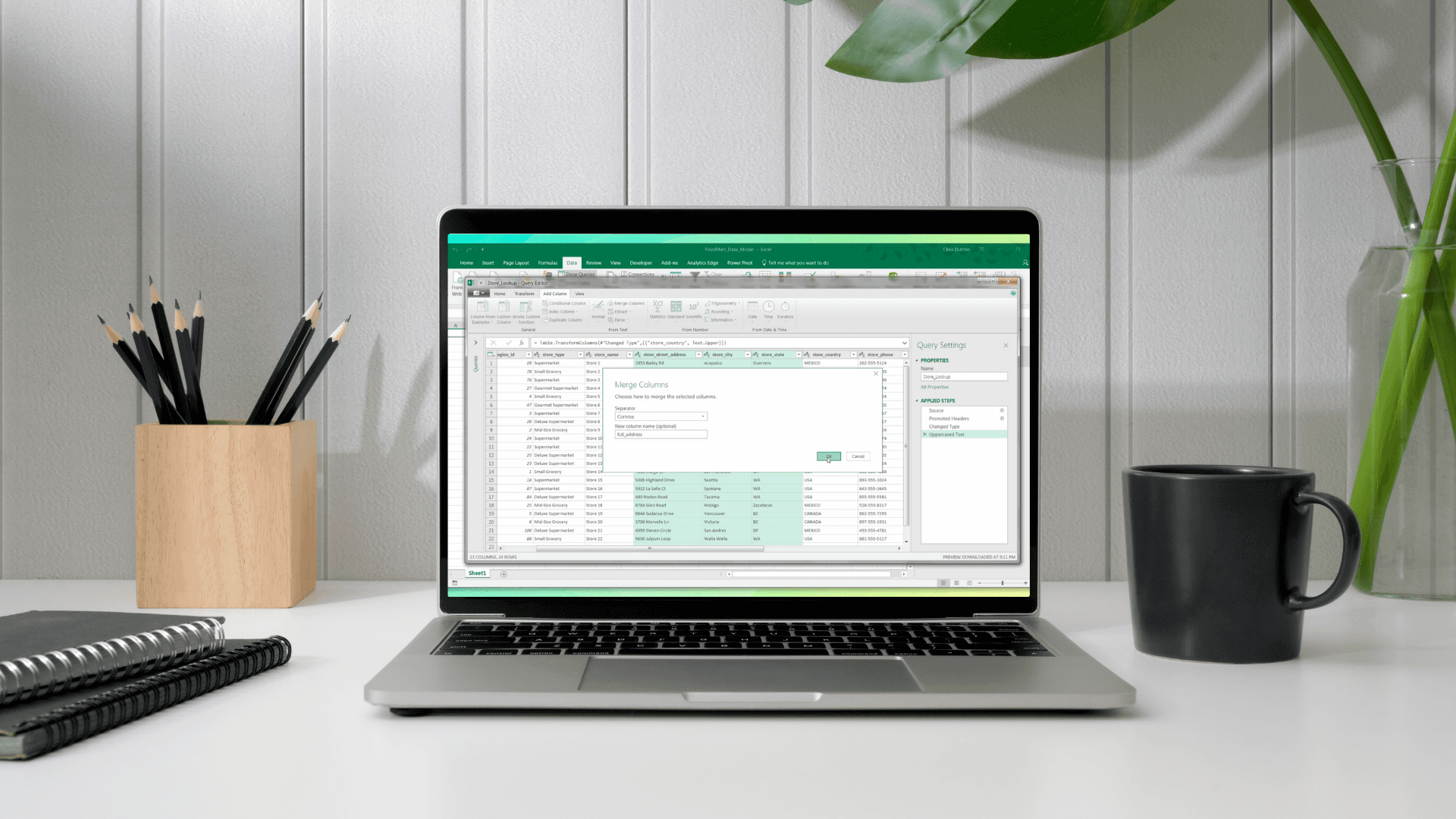
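One concrete way these categories differ, which goes a little beyond the summary above, is in which words each word is allowed to look at. Encoder-style models let every word see the whole sentence, while decoder-style models only let a word see itself and the words before it, since the later words haven't been generated yet. Encoder-decoder models use the first pattern in the encoder and the second in the decoder. The helper names below are made up for this sketch.

```python
def attention_mask(num_tokens, causal):
    """mask[i][j] is True when token i is allowed to look at token j."""
    return [[(j <= i) if causal else True for j in range(num_tokens)]
            for i in range(num_tokens)]

def show(mask, tokens):
    """Print one row per token, with x marking the tokens it can see."""
    for token, row in zip(tokens, mask):
        print(f"{token:>9}", " ".join("x" if allowed else "." for allowed in row))

tokens = ["I", "like", "cold", "lemonade"]

print("Encoder-style (every token sees every token):")
show(attention_mask(len(tokens), causal=False), tokens)

print("Decoder-style (each token sees only itself and earlier tokens):")
show(attention_mask(len(tokens), causal=True), tokens)
```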

7. Using LLMs in Python

Want to try one yourself? The easiest way is through Hugging Face.

Hugging Face’s Model Hub hosts thousands of pretrained models, and their Transformers Python library makes it easy to download and run one. With just a few lines of code, you can:

Run sentiment analysis

Summarize text

Build a chatbot

Most of these models run on PyTorch or TensorFlow under the hood — but you don’t need to worry about learning to code in those libraries unless you want to go deeper and create custom models.
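As a rough sketch of what "a few lines of code" can look like, here is a sentiment analysis example using the `pipeline` function from the Transformers library. It assumes you have run `pip install transformers` along with PyTorch or TensorFlow, and that you are online the first time so the default pretrained model can be downloaded. The review texts and the helper function names are made up for illustration.

```python
def build_sentiment_classifier():
    """Create a sentiment-analysis pipeline (downloads a default model on first use)."""
    # Imported here so the rest of the file can be loaded without the library
    from transformers import pipeline
    return pipeline("sentiment-analysis")

def label_reviews(reviews, classifier):
    """Pair each review with the label the classifier predicts for it."""
    results = classifier(reviews)  # one dict per review, with "label" and "score"
    return [(review, result["label"]) for review, result in zip(reviews, results)]

def demo():
    """Run the classifier on two hypothetical reviews and print the labels."""
    reviews = ["I love this lemonade!", "This was a waste of money."]
    classifier = build_sentiment_classifier()
    for review, label in label_reviews(reviews, classifier):
        print(label, "|", review)
```

Calling `demo()` runs the whole thing. Swapping the task name passed to `pipeline` (for example, `"summarization"`) is the usual way to try a different kind of task with the same pattern.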

8. Fine-Tuning & RAGs

Sometimes, instead of using a pretrained LLM as is, you want a model to work better with your specific data. Here are two common approaches:

Fine-Tuning

Take a pretrained model and continue training it on your own examples. For instance, if you're building a customer support bot, you could fine-tune the model on your company’s FAQ data to match your tone and language.

RAG (Retrieval-Augmented Generation)

Combine a language model with an external data source — like a document database or internal wiki. Instead of relying only on its training data, the model can "retrieve" relevant facts before generating a response.

This approach — a base model + your data — is what powers many enterprise AI tools today.
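Here is a bare-bones sketch of the "retrieve, then generate" idea behind RAG. It is heavily simplified: the documents are invented, retrieval is done by counting shared words (real systems typically compare embeddings instead), and the final step of sending the prompt to an LLM is left out.

```python
import re

# Hypothetical company documents
documents = [
    "Refunds are available within 30 days of purchase.",
    "Our support team is available Monday through Friday.",
    "Shipping takes 3 to 5 business days within the US.",
]

def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, docs, top_k=1):
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(question_words & tokenize(d)), reverse=True)
    return ranked[:top_k]

def build_prompt(question, docs):
    """Put the retrieved text in front of the question for the model to use."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

print(build_prompt("How long does shipping take?", documents))
```

The resulting prompt is what you would pass to a language model, so its answer is grounded in your own documents rather than only in what it saw during training.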

Final Thoughts

We started with neural networks and deep learning, explored transformers & LLMs, and wrapped up with the tools behind models like ChatGPT.

The best part? You don’t need to build these models from scratch. With tools like Hugging Face, you can apply powerful pretrained models to your own data — right in Python.

If you want to learn more, check out my full 22-hour NLP in Python course at Maven Analytics, where we explore all of this in more detail with plenty of hands-on practice.


Alice Zhao

Lead Data Science Instructor

Alice Zhao is a seasoned data scientist and author of the book, SQL Pocket Guide, 4th Edition (O'Reilly). She has taught numerous courses in Python, SQL, and R as a data science instructor at Maven Analytics and Metis, and as a co-founder of Best Fit Analytics.